In this article, you will learn how to get Google to index your site faster, plus tips for overcoming common crawling and indexing hiccups so your content can rank for keywords sooner than your competition’s.

Yes, you can get Google to crawl your website and index content in less than 15 minutes.

What if Google ranked your new blog posts and web pages every time you published them? What if people could find your fresh blog post on Google SERPs within minutes?

In today’s SEO tutorial, you will learn why Google does not index your website, how to get search engines to crawl your site, and how to get search engines to index your site in less than 15 minutes. (I will show you how to do it step by step later)

I have tried several methods to get my websites and new blog posts indexed faster. I have found two effective methods to get Google to index your website and pick up updated web pages sooner. In some cases, Google indexed URLs in less than 5 minutes.

Yes, less than 5 minutes.

Before we look at how to get a new website and its web pages indexed on Google, let’s cover what Google indexing is and why Google sometimes does not crawl and index your web pages. This matters because understanding these reasons helps you protect your content against future Google updates.


- What is Google Indexing, and How does Google Index web pages?

- Why Does Google Not Index Your Web Pages?

- 9 Reasons why Google doesn’t index your web pages

- Why should you index your content as quickly as possible on search engines?

- 2 Effective Ways to Get Google to Crawl Your Site

- Conclusion on how to get Google to index your site

What is Google Indexing, and How does Google Index web pages?

Crawling and indexing is the complex process search engines use to collect information from across the internet. For example, if you search for your Twitter handle on Google, you will find your Twitter profile listed with your name as the title. The same applies to other kinds of content, such as images in Image search.

Before a search engine can index a web page, it must crawl the page. And before it can crawl the page, it must be able to follow a link to it.

In many cases, webmasters don’t want search engines to follow a link. In those cases, search engines cannot index the web page or rank it on SERPs.

Each search engine uses web spiders/web crawlers (also known as search engine bots) to crawl a web page. The famous Google web crawler is known as the Googlebot, which crawls the content around the Internet and helps gather useful information.

For the Bing search engine, it’s Bingbot. Yahoo gathers its web results from Bing, so Bingbot adds new content for both Bing and Yahoo. Baidu uses a web spider called Baiduspider. Yandex uses YandexBot to find new content for the Yandex web index.

Why Does Google Not Index Your Web Pages?

There are many reasons Google may not index your web pages. As you already know, getting a page into search results is a three-step process.

First, Googlebot follows the link (URL). Second, it crawls the web page to find helpful information. Third, it adds the page to Google’s index.

When a person searches for something on Google, Google will use different factors (famously known as Google ranking factors) and list the result within a few milliseconds.

You can easily check whether Google has already indexed your website and web pages by running a site: search on Google.

Ex: site:www.pitiya.com
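If you check several domains or URLs regularly, the site: search above can be turned into a ready-made link. The following is only a minimal sketch: the function name `site_search_url` is made up for this illustration, and it simply builds the same search URL you would get by typing the query into Google by hand.

```python
from urllib.parse import urlencode


def site_search_url(domain: str, path: str = "") -> str:
    """Build a Google search URL for a `site:` query.

    `domain` is the site to check (for example "www.pitiya.com") and
    `path` optionally narrows the check to one page or folder.
    """
    query = f"site:{domain}{path}"
    # urlencode percent-encodes the colon and any slashes in the query.
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    print(site_search_url("www.pitiya.com"))
```

Open the printed link in a browser. If no results are listed, the site or page is probably not in the index yet.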

9 Reasons why Google doesn’t index your web pages

You are not updating your blog regularly

This is one of the top reasons why Google doesn’t index your web pages. If you don’t add new content or update older posts, how do you convince Google that your blog is still alive?

The easiest way to get Google to index your web pages regularly is to update your blog. You don’t need to add new posts every day. Instead, update older posts: add up-to-date content and information, and promote it on social networks using a tool like ContentStudio. That way, you will not only convince Google that you are an active blogger but also drive some extra traffic.

I have found that you can increase the search engine ranking of web pages by updating older content. The reason? Google’s content freshness factor.

When updating existing blog posts, don’t forget to follow these tactics as well.

- Make sure your content is on the topic: Sometimes, your post title may mislead readers. So, make sure your article fulfills everything as promised.

- Is your content unique?: According to Google, 15% of the searches it sees every day are queries it has never seen before. That means there are a lot of opportunities for website owners to tap into. Unique content in particular has a higher chance of ranking at the top of Google. To find such opportunities, use metrics like the Keyword Golden Ratio (KGR) and allintitle results for your keywords. Check out these top KGR calculation tools to automate the task.

- Make sure your article is grammatically correct: Articles full of errors cost you reader loyalty and the credibility you have built over time. Use Grammarly or a similar grammar checker to check punctuation and find grammatical mistakes.

- Use synonyms to polish your article: Using synonyms and closely related words helps your article rank for more keywords. Use these synonym finder tools to generate more equivalent words.

- Add more long-tail keywords: Long-tail keywords are easier to rank for than head terms. Adding a few more relevant long-tail keywords won’t harm your article’s rankings and will increase targeted traffic. This article will teach you how to find lower-competition keywords with good traffic volume.

- Add relevant links: This makes it easier for Googlebot to crawl your blog. Add a few relevant internal and outbound links to your article. Don’t overdo it, as too many links make your content harder to read.

- Update older content: When you start updating posts, you will find that much of the content that worked a few years ago no longer fits today. For instance, images used a few years ago may look dated now. Make sure your readers don’t get outdated content.

- Curate content: There are microblogging networks and third-party blogging platforms such as Google Blogger, Medium, Tumblr, Shopify, and Squarespace that let you publish content. Use ContentStudio to publish your articles on those networks and increase crawling efficiency. Learn more about ContentStudio in this tutorial.

Google has penalized your blog

Google can penalize your website in two ways: a manual penalty or an algorithmic update.

Did you know that Google manually penalizes over 400,000 websites each month?

The main reason many websites are penalized is a poor backlink profile. Google Panda and Penguin are two very important algorithm updates designed to help people find high-quality and relevant content in Google search results.

If Google has penalized your blog, you will probably notice a significant drop in traffic. Go to your analytics program (most people use Google Analytics, and others may use one or more of these real-time traffic analyzing tools) and review overall traffic stats for the last six months or one year.

If you see a substantial traffic drop, go to the Google Search Console dashboard and check whether Google has sent you an important message.

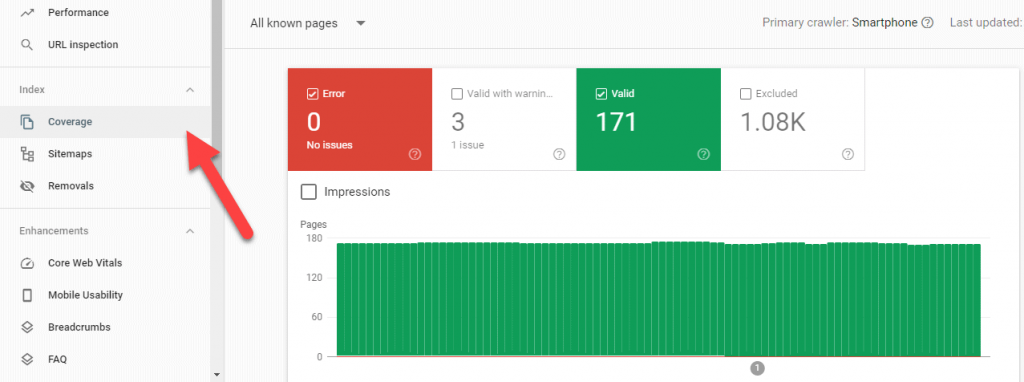

Crawl Errors

A large number of crawl errors is a BIG reason why Google doesn’t crawl your website and add new content to the index.

Go to Google Search Console and look for crawl errors such as Not found (404), No response, and Timeout.

These errors cost you not only customers but also your website’s relationship with Googlebot.

Fix crawl errors as soon as possible. Then use the Google Search Console method described later in this article to ask Google to crawl the pages again.

Robots can’t Index your web pages

Have you mistakenly blocked search engine bots from crawling and indexing your web pages?

If so, Google will not be able to index them. Make sure you have not added robots meta tags that prevent search engines from indexing important web pages, and that your robots.txt file does not block search engine bots from following links to them.

Google Search Console provides you with useful information about the site index.

You can see a live Robots.txt over here.
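Both checks described above can be scripted. The sketch below is only an illustration, not an official Google tool: the helper names `is_crawl_allowed` and `has_noindex` and the example.com URLs are assumptions made up for this example. It uses Python’s standard `urllib.robotparser` to test a URL against robots.txt rules and a small HTML parser to look for a `noindex` robots meta tag.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Records whether a robots (or googlebot) meta tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the page HTML carries a noindex robots meta tag."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


if __name__ == "__main__":
    # Hypothetical robots.txt and page, used only for illustration.
    robots = "User-agent: *\nDisallow: /private/\n"
    print(is_crawl_allowed(robots, "https://example.com/blog/post/"))
    print(is_crawl_allowed(robots, "https://example.com/private/page/"))
    print(has_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))
```

In practice you would paste in your own robots.txt and page source. A page that is blocked by robots.txt or carries noindex will not be indexed, however often you request a crawl.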

Not enough quality backlinks pointing to your web pages

Essentially, the more quality backlinks your site has, the more often Googlebot and other crawlers will follow those links to your site, and the faster your new posts will be indexed.

Analyze how many backlinks your site has and which pages are linking using a tool like Semrush (Review). Alternatively, you can use a tool like BrandOverflow too. Learn more in this BrandOverflow review.

Poor interlinking strategies

Have you ever thought about why your quality, older web pages don’t get more traffic than they used to?

The reason is a poor interlinking strategy. I have covered interlinking in more detail in this Google Blogger SEO guide and Tumblr SEO guide. Interlinking helps decrease your website’s bounce rate, increase user engagement, and share link juice with other web pages.

Internal linking is very powerful. With a proper internal linking strategy alone, your web pages can rank for hundreds of long-tail keywords.

Dead Pages

Dead pages result from poor interlinking and from not having a sitemap for your blog. The more dead pages your site has, the more likely search engines are to miss important web pages that should rank on Google SERPs.

Dead pages are the ones that people and search engine bots cannot find on your site.

You must interlink a web page to another page at least once. Also, you should create a sitemap/Archive page on your blog, not only for search engines to find all indexable content but also for people to browse all posts deeply.
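To make the idea concrete, here is a minimal sketch of how dead pages can be detected once you have a list of internal links. It assumes you already have a map of which page links to which (for example, exported from a crawler); the URLs and the function names `click_depths` and `dead_pages` are hypothetical. It walks the links breadth-first from the home page, records how many clicks each page is from the home page, and reports pages that can never be reached.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks needed to reach each page from `home`."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def dead_pages(links: dict[str, list[str]], home: str) -> set[str]:
    """Return known pages that cannot be reached from `home` by following links."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - set(click_depths(links, home))


if __name__ == "__main__":
    # Hypothetical internal link map: page -> pages it links to.
    site_links = {
        "/": ["/blog/", "/about/"],
        "/blog/": ["/blog/post-a/"],
        "/blog/post-a/": ["/"],
        "/blog/orphan/": ["/"],  # links out, but nothing links to it
    }
    print(click_depths(site_links, "/"))
    print(dead_pages(site_links, "/"))
```

Any page reported by `dead_pages` needs at least one internal link or a sitemap entry before crawlers can discover it.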

How to check whether your site’s pages are indexable by search engine bots

There is a way to determine whether your site is correctly configured so that every post is linked from somewhere within the site. My favorite SEO software for this is WebSite Auditor.

Download WebSite Auditor over here and set up a project.

After crawling the entire site, go to Site Structure > Visualization. Next, view the map based on ‘Click Depth.’

Learn more in this WebSite Auditor review.

Embedding a post sitemap widget in the 404 error (Not Found) page will also increase crawl efficiency. If you use WordPress, you can use this Google XML sitemap generator plugin to create a sitemap for your blog.
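If you are not on WordPress, an XML sitemap is simple enough to generate yourself. The following is a rough sketch that follows the public sitemaps.org format. The function name `build_sitemap`, the example.com URLs, and the dates are placeholders invented for this example.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages, used only for illustration.
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/blog/first-post/", "2024-01-10"),
    ]))
```

Save the output as sitemap.xml at the root of your site and submit its address in Google Search Console.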

Your Web host is down!

Choosing a good web hosting company to host your website will make it super easy to blog. Even though there are lots of free WordPress web hosting services, you shouldn’t ever opt for them.

Use either a relatively inexpensive WordPress hosting company like Bluehost or WPX, or a WordPress-optimized hosting company like WPEngine.

You have removed a page from showing in Google

Did you use Google’s “Removals” tool to remove any URL from Google Index?

If so, your only option may be to change the URL, add a 301 permanent redirect from the old post permalink to the new permalink, and get search engines to crawl your site again.

When you work with Google Search Console, be careful and make sure you know exactly what you are doing. A wrong action can remove URLs from the Google index far faster than it takes to get them indexed.
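On WordPress, a redirect plugin or your host’s settings normally handles the 301 for you. Purely to illustrate what a 301 permanent redirect looks like at the HTTP level, here is a minimal sketch written as a Python WSGI application. The permalinks in `REDIRECTS` and the name `redirect_app` are hypothetical, and this is not how WordPress itself implements redirects.

```python
# Hypothetical mapping of old permalinks to new permalinks.
REDIRECTS = {
    "/old-post/": "/new-post/",
}


def redirect_app(environ, start_response):
    """Tiny WSGI app: answer old permalinks with a 301 to the new permalink."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and crawlers that the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    # Serves on http://localhost:8000/ for local experiments only.
    with make_server("", 8000, redirect_app) as server:
        server.serve_forever()
```

The important parts are the `301 Moved Permanently` status and the `Location` header pointing at the new permalink.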

Why should you index your content as quickly as possible on search engines?

Now you know several reasons why Google doesn’t index your web pages and doesn’t rank them on Google SERP. Here are a few reasons why you should get search engines to index your site as quickly as possible.

- Rank your web pages quickly: Why wait weeks or months to see your fresh content rank on Google SERPs? Imagine Google listing your new posts on its search result pages within a few minutes.

- Prevent duplicate content penalties: Someone might steal your content and work to rank it on Google. The worst case is when their pages start outranking your post on Google SERPs, and Google might even penalize your website for duplicate content. Therefore, the best thing to do right after publishing a new blog post is to get Googlebot to index it as soon as possible.

- Increase overall keyword density: Google uses site relevancy and overall keyword density to rank web pages for search terms. You can find your website’s high-density keywords and keyword stemming in Google Webmaster Tools. By getting new posts indexed, you can increase the overall keyword density and, in turn, the search engine ranking of older posts.

- Convince Google that your website is active: People love fresh content, and Google loves websites that update often and provide quality content. The next time you update older posts or publish a new post, try getting Google to index the content quickly. This shows Google that you update your blog regularly.

2 Effective Ways to Get Google to Crawl Your Site

#1. Google Ping

The feature of RSS feed services that most SEOs like best is the ping service. If you use FeedBurner as the RSS feed service for your blog, you can use this method.

Step #1: Sign in to your Feedburner account and go to Feed Title >> Publicize >> Pingshot.

Step #2: Activate the Pingshot service.

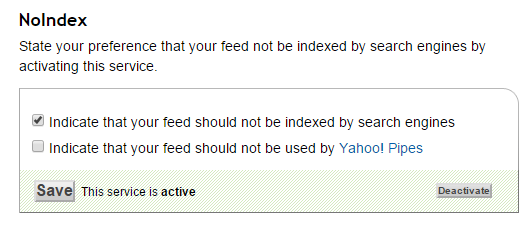

Step #3: Noindex the RSS feed so that it does not create duplicate content on authority websites.

Besides FeedBurner’s Pingshot service, you can use an online ping service to ping new URLs to RSS feed services and blog search engines. Here are a few online blog pinging services.

WordPress Ping List

If you are using WordPress and want to notify search engines about your new updated content as soon as possible, copy and paste this Ping list.

Paste these Ping URLs in the Update Services section under the Writing settings.
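For the curious, a ping is just a small XML-RPC call, conventionally named `weblogUpdates.ping`, that carries the blog’s name and URL. The sketch below builds that request body with Python’s standard `xmlrpc.client` module so you can see what gets sent. The blog name, the URL, and the function name `build_ping_payload` are placeholders, and nothing is actually sent over the network.

```python
import xmlrpc.client


def build_ping_payload(blog_name: str, blog_url: str) -> str:
    """Return the XML-RPC request body for a weblogUpdates.ping call."""
    return xmlrpc.client.dumps((blog_name, blog_url), methodname="weblogUpdates.ping")


if __name__ == "__main__":
    # Placeholder blog details, used only for illustration.
    print(build_ping_payload("My Blog", "https://www.example.com/"))
```

To send a ping yourself, you could call `xmlrpc.client.ServerProxy(endpoint).weblogUpdates.ping(blog_name, blog_url)`, where `endpoint` is one of the ping URLs from your own list.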

#2: URL Inspection (formerly Fetch as Google) – Google Search Console

This is another way to get Google to crawl and index your website quickly. I like this method because I can submit pages from anywhere on my website at any time. I can also see when Google indexed the web pages.

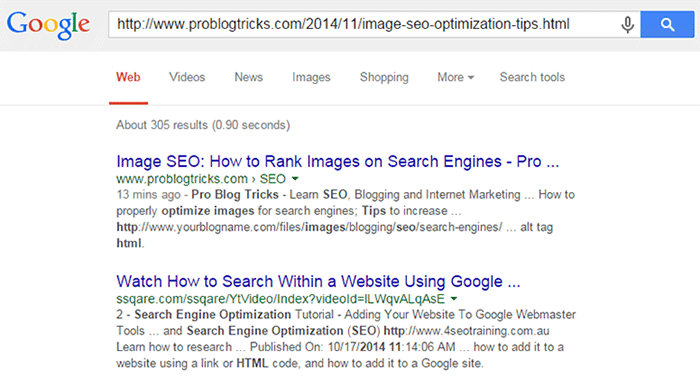

I submitted my previous post, Image SEO – How to rank Images on Search Engines, to the Google index. The result? Google indexed it within a few minutes, and when I searched for it again on Google, the result showed that the page had been indexed 13 minutes earlier. Here are the steps for properly adding a web page to the Google index in Google Search Console.

Step #1: Make sure Google has not yet indexed your post. You can easily check by searching for your web page address on Google. In my case, I searched for the Image SEO post’s permalink first.

As you can see, the post is not yet indexed by Googlebot. Ensure you didn’t add the noindex tag on the web page, as it can prevent Googlebot from indexing it.

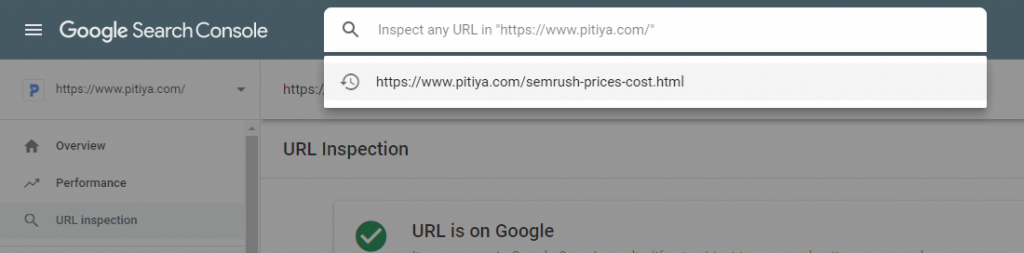

Step #2: Go to Google Search Console >> URL Inspection

Step #3: Enter the page URL that you want to inspect. Recently, I inspected this post on Semrush price increases and was able to submit it to Google Index manually.

Step #4: In the next step, you will see a popup box. It will show that the URL is being inspected. Wait a few seconds until it’s done. Once it is finished, click on the “Request Indexing” link.

Google will now add your URL to the indexing queue, and Googlebot will crawl your page soon.

Step #5: Here’s the Google SERP when I searched for the URL again after a 13-minute break.

Here is a video that explains how to use the URL Inspection tool.

Conclusion on how to get Google to index your site

Google’s URL Inspection tool is very useful for getting a web page indexed on Google faster.

There is no guarantee that a submitted URL will be crawled immediately or appear on Google SERPs right away.

However, from an SEO point of view, these tools are far more useful than the low-quality tools that promise to get your web pages indexed on Google quickly.

The best way to protect your blog and build a strong foundation is to get search engines to index your site as quickly as possible. By following the two methods above, you can get your website indexed on Google in less than ten minutes.

In most cases, I could get Google to crawl my new posts in under five minutes. I don’t have screenshots to prove that right now, but I hope you can see that it is possible.

So, how else can you get Google to index your website fast? Let me know in the comments below. I will respond to every comment.

Great article. I have recently learned how important indexing is and I appreciate you explaining to me some of the reasons why my page is not getting indexed faster. Thanks 🙂

Yes, Karen, web page indexing is very important in search engine optimization. The quicker your posts are indexed, the sooner you will get traffic from search engines. Glad you found this article useful. Thanks for the comment 🙂

informative blog on indexing of a website, but if i change one blog one or two times then it wouldn't effect the site performance.

Yes, the more often you update the blog, the more efficiently Google will index it. Google likes fresh content. Thanks for sharing your ideas!

hi, thanks Chamal this is really helpful tips for me…now i can do it in fast way..

thanks

Pradeep, glad this guide helped you learn about indexing web pages. Every time you publish a blog post or update an existing one, follow this method.

hi, thanks for your guidance, but I have a question. Do you have any suggestions about how to get index for web 2.0. I know that blogspot, wordpress, tumblr are get index fast but the other site like beep.com get index slowly. Even though I submit it to google, ping, share social site but it gets no index.

Thank you.

If the site is new to the internet, it can take anywhere from a few minutes to hours, or sometimes days, to be indexed by search engines. If you don’t see Google indexing your website, create some social signals (especially Google+ likes and shares; a URL in a YouTube description also works pretty well) through content sharing networks. Then verify your website with GWT and use Fetch as Googlebot. Your web 2.0 website and web pages should be indexed by Google within a few minutes.

Make sure you didn’t block web spiders from crawling the web pages on your site. If you use the Fetch as Googlebot tool while a noindex tag is in the page header, you are violating Google’s terms.

Many thanks for your help. I will try to share social sites

Jenny, follow the tips above. Your website should be listed on Google SERPs within a few minutes. Let me know if I can help you in any other way. Thanks!

Chamal, thanks for writing these lines. I was trying to get my posts indexed quicker for a long time. Now, thanks to your method, I am able to do that. Hopefully this works for an already established website.

Yes, Sam, these tips work very well for already established websites as well. Give it a try and let me know if it doesn’t work.

Chamal Priyadarshana Sir,

How i can index all my web page within a hour, i have over 200 web pages but only 3 pages are indexed, so how i can index quickly

Submit your blog’s sitemap page to Google.

Chamal Priyadarshana Sir,

How i can get sitemap in my blog and how i can get quick index in my all blog pages, please guide me. Please render steps

Hi Chamal,

I indeed this one is a great article shared. Yes if you want to rank your pages on Google, then you have to submit a sitemap to Google.

This is a very Crucial part of search engine optimization. Without it your website never rank on Search Engines.

Once again Thanks for sharing this wonderful article. 🙂

~Swapnil Kharche

Hi Swapnil,

Yes, of course, submitting a sitemap is very important in most cases.

Thanks for sharing your thoughts Swapnil!

Helpful article. It is really a common problem that we do not get google index quickly after publishing our posts. But it is not a good sign. Because if google does not index our posts or pages, we will not appear in SERPs. So we need the proper guideline. I think your post is full of perfect information. Thanks for sharing.

Yes, you are right, munna. If search engines don’t see our pages, how could we expect to receive organic traffic? We can’t.

Glad you found this information helpful.

Very helpful article, It is a common problem that we do not get Google index quickly after publishing our posts. I think your post will surely help to solve this common issue. Thanks a ton.

In Search Console I am able to find only three menu items Home, All messages, Other Resources. Intially all the options appeared. Plz help me to fix the problem

I believe you’re on the home page of Google Search Console, where you can see all verified websites. It looks like you haven’t verified your website with Google. First add your website to Google Search Console and verify it, and then follow the steps.

Greetings,

I have a website i want to rank on google.

Can you help with the job ?

Greetings,

Please send details at chamal [at] pitiya.com.